In the field of artificial intelligence, Large Language Models (LLMs) such as GPT-4 stand out as a major innovation, proving useful in a range of areas including automated customer support and creative content generation. Nonetheless, there exists a notable challenge in leveraging the capabilities of these models while also maintaining data privacy. This blog aims to explore the terrain of adjusting LLMs in an ethical manner, affirming that the advancement of artificial intelligence doesn’t infringe on personal privacy.

Understanding LLM Fine-Tuning

For many businesses, getting high quality answers and analysis requires deep domain knowledge of the organization, the industry in which it operates and its customers. Despite the tremendous potential of LLMs, without further augmentation, they lack the ability to solve key business challenges. Imagine deploying an LLM for a customer service chatbot in a call center: while a generic model may possess a broad understanding of language, it might lack the industry-specific jargon, compliance adherence, and contextual understanding pivotal to effectively service queries in, say, the financial or healthcare sector. Similarly, an internal IT helpdesk bot must understand the specific systems, software, and common issues within a particular company to provide swift and accurate resolutions. Thus, fine-tuning, steered by domain expertise, adapts an LLM to comprehend and generate responses that resonate with the particularities and nuances of specific industries or functions, thereby elevating its practical utility and efficacy in real-world applications.

Contrasting fine-tuning with prompt engineering unveils distinct approaches toward leveraging LLMs. While prompt engineering involves crafting inputs (prompts) in a manner that guides the model towards generating desired outputs without altering its parameters, fine-tuning involves retraining the model on specific data, thereby modifying its weights to enhance its proficiency in specialized domains. While prompt engineering can direct a model’s responses, fine-tuning ingrains the specialized knowledge and contextual understanding into the model, enabling it to autonomously generate responses that are inherently aligned with the domain-specific requirements and norms. Ultimately, both approaches help to augment LLMs to incorporate domain specific knowledge.

Challenges in Fine-Tuning

While fine-tuning elevates model performance, it presents obstacles, especially concerning data handling and management. Navigating through the intricacies of fine-tuning, the risk of inadvertently incorporating Personally Identifiable Information (PII) into the training process stands out as a key challenge, presenting not just ethical but also tangible business risks. In a practical application, such as an automated email response system or a customer service chatbot, the model might generate responses that include or resemble actual personal data, thereby risking privacy breaches and potentially resulting in severe legal and repetitional damages. For instance, a financial institution utilizing an LLM to automate customer communications could inadvertently expose sensitive customer data, like account details or transaction histories, if the model was fine-tuned with datasets containing un-redacted PII, thereby not only breaching trust but also potentially violating data protection regulations like the GDPR.

Moreover, inherent biases in the data utilized for fine-tuning can also perpetuate and amplify prejudiced outputs, which is especially concerning in applications where fairness and impartiality are crucial. Imagine an HR chatbot, fine-tuned on historical company data, being utilized to screen applicants or respond to employee queries. If the training data contains inherent biases, such as gender or racial biases in promotion or hiring data, the fine-tuned model could inadvertently perpetuate these biases, providing biased responses or recommendations. This not only contradicts the ethical imperatives of fairness but also jeopardizes organizational commitments to diversity and inclusivity, thereby potentially alienating customers, employees, and stakeholders, and exposing the organization to ethical scrutiny and legal repercussions.

Safeguarding Personally Identifiable Information (PII), such as names, addresses, and social security numbers, is paramount. Mishandling PII can not only tarnish organizational reputation but also potentially result in severe legal ramifications.

Redaction: A Critical Step in Ensuring Privacy

PII encompasses any information that can be used to identify an individual and redaction is an important tool in obfuscating sensitive PII data. Redaction is the process of obscuring or removing sensitive information from a document prior to its publication, distribution, or release. When combined with training data, redaction can ensure that models are trained without compromising individual privacy. The cruciality of redacting PII, especially during the fine-tuning of LLMs, is underscored by the imperative to shield individual privacy and comply with prevailing data protection legislations, ensuring that the models are not only effective but also ethically and legally compliant.

Historically, redacting PII from unstructured data, such as text documents, emails, or customer interactions, has been a convoluted task, marred by the complexities and variabilities inherent in natural language. Traditional methods often demanded meticulous manual reviews, a labor-intensive and error-prone process. However, the advent of AI and machine learning technologies has ushered in transformative capabilities, enabling automated identification and redaction of PII. AI models, trained to discern and obfuscate sensitive information within torrents of unstructured data, offer a potent tool to safeguard PII, mitigating risks and augmenting the efficiency and accuracy of the redaction process. The amalgamation of advanced AI-driven redaction with human oversight forms a robust shield, safeguarding privacy while harnessing the vast potentials embedded within data.

Practical Steps for Ethical Fine-Tuning

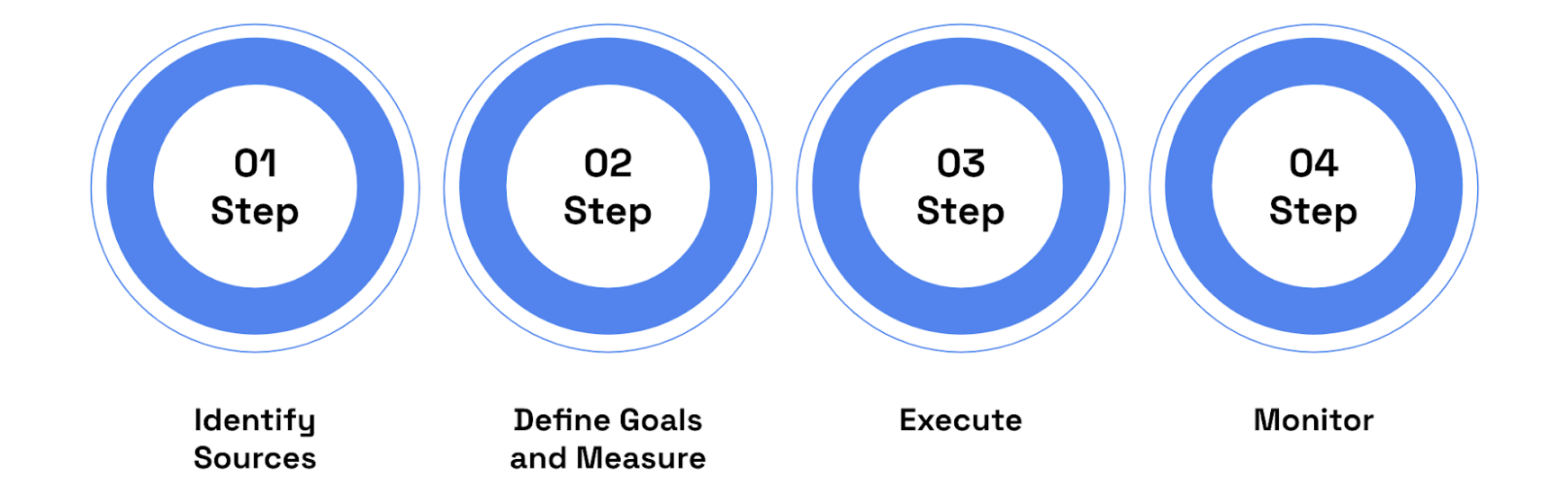

Embracing the essence of ethical fine-tuning while navigating through the labyrinthine alleys of data privacy and redaction mandates a structured and pragmatic framework. Embarking on this journey necessitates weaving redaction seamlessly into the fine-tuning process, ensuring that the resultant models are not only proficient but also staunch guardians of privacy.

- Identify Sources of Data and PII Considerations/Requirements: The genesis of ethical fine-tuning lies in the meticulous identification of data sources, alongside a thorough analysis of the inherent PII considerations and regulatory requirements. Employing AI models that can sift through voluminous data, identifying and cataloging PII, enables organizations to comprehend the privacy landscape embedded within their data. This not only elucidates the scope and nature of the redaction required but also ensures alignment with legal and ethical benchmarks.

- Defining Goals and Measures: Charting a clear trajectory involves establishing well-defined goals and measures, ensuring that fine-tuning is not only aligned with organizational objectives but also steadfastly adheres to privacy imperatives. Goals could encompass achieving enhanced model performance in specific domains while measures should delineate the acceptable limits of data usage and the efficacy of redaction, ensuring that no PII permeates into the fine-tuned model.

- Executing: The execution phase involves deploying AI-driven redaction models to automatically identify and obfuscate PII within the identified data sources, followed by the fine-tuning of the LLM. Utilizing AI for redaction minimizes the dependency on labor-intensive manual reviews and augments the accuracy and efficiency of the process. Subsequently, the fine-tuning of the LLM should be guided by the defined goals and measures, ensuring that the model evolves within the demarcated ethical and privacy boundaries.

- Monitoring: Post-deployment, continuous monitoring utilizing AI tools that can detect and alert regarding any inadvertent PII exposures or biased outputs ensures that the model operates within the established ethical and privacy parameters. This ongoing vigilance not only safeguards against potential breaches but also facilitates the iterative refinement of the model, ensuring its sustained alignment with organizational, ethical, and legal standards.

In encapsulation, integrating redaction into the fine-tuning process through a structured framework ensures that LLMs are not only proficient in their functionalities but also unwavering sentinels of data privacy and ethical use. This amalgamation of technological prowess with ethical vigilance paves the way for harnessing the boundless potentials of LLMs without compromising the sanctity of individual privacy.

Conclusion

Embarking on the journey of fine-tuning LLMs, it is pivotal to navigate the privacy paradox with meticulous care, ensuring that the technological advancements forged do not infringe upon the sanctity of individual privacy. Organizations must steadfastly adhere to ethical and privacy-conscious practices, ensuring that the marvels of artificial intelligence are harnessed without compromising moral and legal standards.